After that last post I got to thinking that an automated random picker would be a simple, fun project. I figured it would be fairly straightforward to run a site-specific Google search against VulnHub, pull the download link for a VM, then dump it to a text file. That idea didn’t pan out at all, really, but I did get the script built.

Turns out, you need API access to Google to run scripts against it. Fair enough. This was a hobby project, so my intended usage was low enough to sit firmly in the free tier. While I was looking into putting that together, I was poking around VulnHub in another tab and found the RSS links. I’ve not done any API interaction, but I do know that one of Python’s strengths is text manipulation. Pivot #1.

So how hard is it to pull a text file with a very specific format and dump the content into a list? Not hard at all. The feedparser module does this natively when provided a URL: it returns a dictionary-like object with the feed entries in a list. Easy enough. Except it never loaded. I tested all my ideas in the interpreter, and the URL I used, https://www.vulnhub.com/feeds/added/atom/, never seemed to load the variable the way all the demos I was seeing did. I tried other feeds, same result. Before I looked up anything about feedparser and https, I gave it one shot with http, since none of the samples I found were using https. Bingo. All is well.

Er, maybe not. I had previously determined the only way to get a random entry is to feed a random function a start and end value, then pull a random number. The end value would be the length of the list we just built. Except that when I tried to check that, I found a short list.
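Here is a minimal sketch of that interpreter session, written for Python 3 (the snippets in this post use Python 2 print statements). It assumes the third-party feedparser package is installed, and the helper names load_feed and entry_count are made up for illustration. feedparser.parse() takes a URL and returns a dictionary-like object whose 'entries' key holds one item per feed entry.

```python
# http rather than https, since the https form never loaded in my testing
FEED_URL = "http://www.vulnhub.com/feeds/added/atom/"


def load_feed(url=FEED_URL):
    """Fetch and parse a feed. Needs network access and the feedparser package."""
    import feedparser  # third-party: pip install feedparser
    return feedparser.parse(url)


def entry_count(feed):
    """Number of entries in a parsed feed; this is the end value for the random pick."""
    return len(feed["entries"])
```

In the interpreter, that would be vh_feed = load_feed() followed by entry_count(vh_feed). A count of zero is a hint that the feed did not load.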

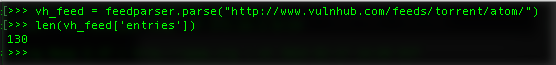

Now, the homepage of VulnHub.com shows there are 13 pages of at least 3 VMs each. That math didn’t work. So I checked the other feeds, and bingo again: the torrent feed, http://www.vulnhub.com/feeds/torrent/atom/, has the information I want.

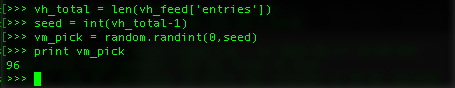

So now I know I have what I need; I just need to do a little math.
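The math amounts to picking a random index between 0 and one less than the number of entries. A minimal sketch in Python 3, where pick_index and sample_entries are illustrative names with placeholder data standing in for vh_feed['entries'], not code lifted from the actual script:

```python
import random


def pick_index(entries):
    """Return a random valid index into a non-empty list of entries."""
    if not entries:
        raise ValueError("feed has no entries to pick from")
    # randrange(n) yields 0 <= i < n, so the result is always a valid list index
    return random.randrange(len(entries))


# Stand-in for vh_feed['entries']; titles and links are made-up placeholders
sample_entries = [
    {"title": "Example VM 1", "link": "http://example.com/vm1.torrent"},
    {"title": "Example VM 2", "link": "http://example.com/vm2.torrent"},
    {"title": "Example VM 3", "link": "http://example.com/vm3.torrent"},
]

vm_pick = pick_index(sample_entries)
```

One thing to watch: random.randint(a, b) includes both endpoints, so passing the list length as the end value would occasionally produce an index one past the end. randrange avoids that.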

The next bit is pretty straightforward: turn that index value (vm_pick) back into the actual data from the list.

    print vh_feed['entries'][vm_pick]['title']

Well then. That’s all nice and fuzzy. Since we’re using this to look at torrents, couldn’t we just pull down the torrent file to our local watched folder? Logically it didn’t seem that hard. wget works just fine in the terminal, so there must be Python stuff for this.

Absolutely.

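    from os import path
    from urlparse import urlsplit    # Python 2 module locations
    from urllib import urlretrieve

    pick_url = vh_feed['entries'][vm_pick]['link']
    # Name the local file after the last path component of the URL
    vm_pick_filename = path.basename(urlsplit(pick_url).path)
    vm_pick_filename = "/Users/username/Downloads/" + vm_pick_filename
    print vm_pick_filename
    # Skip the download if a file with that name already exists
    if not path.isfile(vm_pick_filename):
        urlretrieve(pick_url, vm_pick_filename)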
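For anyone on Python 3, the same steps can be sketched as a small function. This is an illustration under assumptions rather than the script itself: save_torrent and its fetch parameter are hypothetical names, and the only real differences are that urlsplit lives in urllib.parse and urlretrieve in urllib.request.

```python
from os import path
from urllib.parse import urlsplit
from urllib.request import urlretrieve


def save_torrent(pick_url, download_dir, fetch=urlretrieve):
    """Save pick_url into download_dir unless a file of that name already exists.

    Returns (local_path, downloaded), where downloaded is False if the
    file was already there and nothing was fetched.
    """
    # The last path component of the URL becomes the local file name
    filename = path.basename(urlsplit(pick_url).path)
    if not filename:
        raise ValueError("URL has no file name: " + pick_url)
    local_path = path.join(download_dir, filename)
    # Same guard as above: never overwrite an existing file
    if path.isfile(local_path):
        return local_path, False
    fetch(pick_url, local_path)
    return local_path, True
```

The fetch parameter is only there so the function can be tried without touching the network; calling save_torrent(pick_url, "/Users/username/Downloads/") uses urlretrieve as before.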

That’s it. It may not look like much, but it’s got it where it counts. As an exercise, I’m sure I’ll learn more that will help me improve it later. If you want to grab it for any reason, feel free.

https://github.com/wea53L/python-utilities/blob/master/pick-a-vm.py